It’s October, Cybersecurity Awareness Month, so there’s no better time to audit the potential security risks in your AI-generated code.

What if I told you that you can reduce vulnerabilities in your AI-generated code in less than 3 hours?

And what if you could do it at no additional cost, with no extra workload and no changes to your tech stack?

This 5-7 minute read gives you simple yet effective steps to start your AI code security journey.

So then, let’s get started.

Table of Contents

- Introduction – The Hidden Crisis in AI Code

- What You’ll Learn – Your Complete Security Roadmap

- Three Critical Security Gaps – What Most Organizations Miss

- Your 3-Step Action Plan – Immediate Protection Strategies

- Implementation Roadmap – Making Security Stick

- Conclusion – Securing Your AI-Driven Future

The Hidden Crisis Hiding in Your Codebase

Last week, I was talking to a CISO at a Fortune 500 company. Smart guy, great team, all the industry-standard security tools.

But when I asked him about AI-generated code in their environment, he went quiet.

“We know developers are using ChatGPT and GitHub Copilot,” he said.

“But honestly? We have no idea how to secure it.”

He’s not alone.

In fact, according to Forbes, Google CEO Sundar Pichai said that 25% of the code at Google is AI-generated.

AI tools are churning out thousands of lines of code daily. Developers love the speed. Management loves productivity gains.

But here’s what nobody’s talking about: nearly half of all AI-generated code contains security vulnerabilities.

In other words, roughly every second piece of AI-generated code you ship might have a security hole.

I’ve seen this pattern dozens of times now. Organizations rush to adopt AI coding tools, celebrate the productivity boost, then get blindsided six months later when vulnerabilities start surfacing in production.

Our team just used Replit’s AI app generator to build a complete forum app in minutes, with authentication, posts, comments, and user profiles.

The finished app contained 11 serious security vulnerabilities. Check out this LinkedIn post by our CTO, Bar Hofesh, for the details.

The scary part?

Most security teams don’t even know which code in their environment was AI-generated.

What You’ll Learn from This Guide

Look, I’m not here to scare you away from AI coding tools. They’re incredible when used right. But after working with hundreds of organizations on this exact problem, we’ve seen what works and what doesn’t.

This guide will give you:

- The reality check – Why 45% of AI-generated code contains vulnerabilities (and why that number is climbing).

- The blind spots – The three critical security gaps that catch even sophisticated security teams off-guard.

- The solution – A proven 3-step framework you can implement starting tomorrow – no budget required.

- The roadmap – How to roll this out across your organization without slowing down development.

- The peace of mind – Specific tools and processes that actually identify AI code vulnerabilities before they hit production.

If your developers are using any AI coding assistant – and let’s be honest, they probably are whether you know it or not – this could be the difference between staying ahead of the curve and becoming another breach statistic.

Three Critical Security Gaps Organizations Miss

Gap No 1: The “It Works, So It’s Fine” Trap

Here’s the thing about AI-generated code – it’s really good at solving functional problems.

Need a function to parse JSON?

AI nails it.

Want to integrate with an API?

Done in seconds.

But here’s the catch: functional and secure are not the same thing.

I was reviewing code at a fintech startup last month. Their developers had been using AI to speed up their payment processing integration.

The code worked perfectly in testing.

It handled edge cases beautifully. It even had decent error handling.

But it also had three separate SQL injection vulnerabilities.

The AI had generated code that worked exactly as requested.

But it built its SQL queries by concatenating strings instead of using parameterized statements.

It’s a classic mistake that experienced human developers rarely make anymore.

Yet AI tools stumble on it regularly.
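To make the difference concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `payments` table, its columns, and the function names are hypothetical and chosen only for illustration; this is not the startup’s actual code.

```python
import sqlite3

def make_demo_db() -> sqlite3.Connection:
    # Hypothetical in-memory table for the demo.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, account_id TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO payments (account_id, amount) VALUES (?, ?)",
        [("alice", 10.0), ("bob", 25.5)],
    )
    return conn

def find_payments_unsafe(conn: sqlite3.Connection, account_id: str):
    # Vulnerable: user input is concatenated into the SQL text, so input
    # like "x' OR '1'='1" rewrites the WHERE clause.
    query = "SELECT id, amount FROM payments WHERE account_id = '" + account_id + "'"
    return conn.execute(query).fetchall()

def find_payments_safe(conn: sqlite3.Connection, account_id: str):
    # Parameterized: the driver binds account_id as data, never as SQL.
    query = "SELECT id, amount FROM payments WHERE account_id = ?"
    return conn.execute(query, (account_id,)).fetchall()

if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"
    print(len(find_payments_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_payments_safe(conn, payload)))    # 0: treated as a literal ID
```

Both functions return the same result for normal input, which is exactly why the unsafe version passes functional tests.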

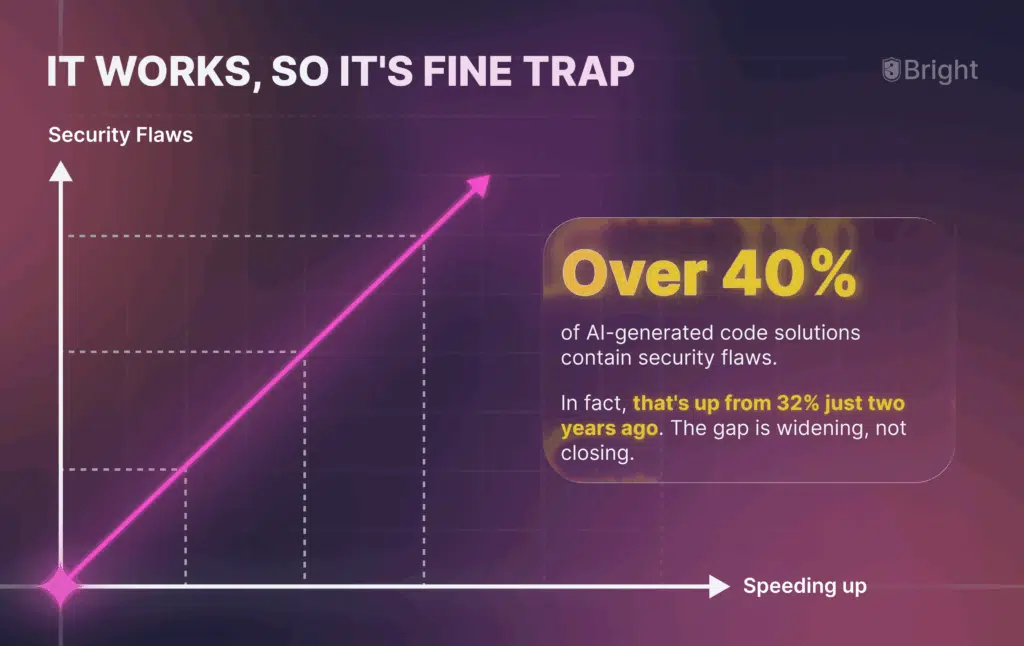

Recent studies show that over 40% of AI-generated code solutions contain security flaws.

In fact, that’s up from 32% just two years ago. The gap is widening, not closing.

For a broader view, check out the Top 5 LLM Application Security Risks in 2025.

Gap No 2: The Hallucination Problem Nobody Talks About

AI doesn’t just make mistakes. It hallucinates.

Last month, I saw AI-generated code that referenced a “secure_hash_md5()” function that doesn’t exist in any standard library.

The developer didn’t catch it because the function name looked legitimate.

The code compiled because they had created a wrapper function with that name.

Guess what that wrapper function did?

It used MD5 – a hashing algorithm that’s been considered cryptographically broken for over a decade.

AI systems hallucinate when generating code, producing solutions that look professional but contain fundamental security flaws. When developers accept these suggestions without deeper review, vulnerabilities slip through.

I’ve seen AI recommend:
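Here is a sketch of what a misleadingly named wrapper like the one above can look like, next to a safer option from Python’s standard library. `secure_hash_md5` mirrors the name from the story; `hash_password`, `verify_password`, and the iteration count are hypothetical choices for illustration, so check current password-storage guidance before picking a work factor.

```python
import hashlib
import hmac
import os
from typing import Optional

def secure_hash_md5(password: str) -> str:
    # Looks trustworthy, but MD5 is fast and broken: no salt, no work factor.
    return hashlib.md5(password.encode("utf-8")).hexdigest()

ITERATIONS = 600_000  # illustrative work factor (assumption); tune to current guidance

def hash_password(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    # Salted, deliberately slow key derivation (PBKDF2-HMAC-SHA256).
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    _, digest = hash_password(password, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(digest, expected)
```

Usage: store the `(salt, digest)` pair returned by `hash_password("hunter2")`, then call `verify_password` with the stored values at login. A reviewer who sees `hashlib.md5` inside anything named “secure” should treat it as a red flag regardless of the function name.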

- Outdated encryption methods.

- Non-existent security libraries.

- Authentication patterns that were deprecated years ago.

- Network configurations with known security holes.

Gap No 3: The Scale Nightmare

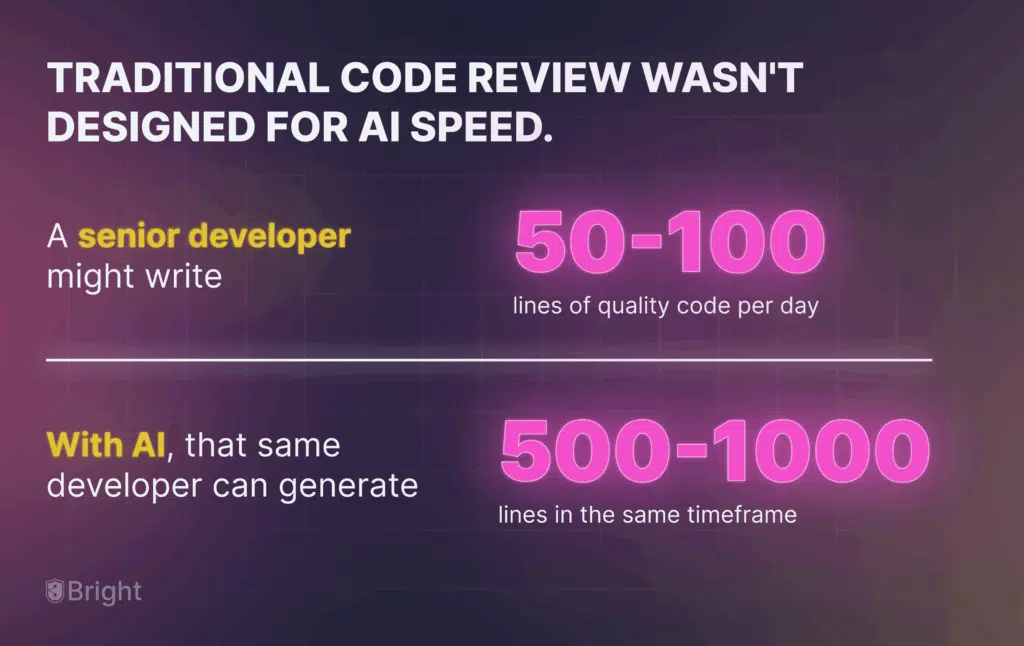

Traditional code review wasn’t designed for AI speed.

A senior developer might write 50-100 lines of quality code per day. With AI, that same developer can generate 500-1000 lines in the same timeframe.

Your security review processes weren’t built for that volume.

I worked with a company whose AI-powered development team was shipping code 5x faster than before.

Great for delivery timelines. Terrible for their security team, which suddenly had to review 5x more code with the same resources.

The result?

They started spot-checking instead of comprehensive reviews.

Three months later, they discovered 47 security issues in production – all in AI-generated code that had slipped through their overwhelmed review process.

AI tools have failed to defend against cross-site scripting (XSS) in 86% of relevant code samples.

This isn’t just a training problem. It’s a systemic issue with how AI approaches security.
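The most common XSS failure is interpolating user input into HTML without encoding it. Here is a minimal sketch using Python’s standard `html` module; the `render_comment_*` functions are hypothetical, and real applications usually get this from a templating engine with auto-escaping turned on.

```python
import html

def render_comment_unsafe(author: str, body: str) -> str:
    # Vulnerable: a body like "<script>...</script>" is emitted as live markup.
    return f"<p><b>{author}</b>: {body}</p>"

def render_comment_safe(author: str, body: str) -> str:
    # html.escape encodes &, <, > and, with the default quote=True, " and ' as well.
    return f"<p><b>{html.escape(author)}</b>: {html.escape(body)}</p>"
```

For example, `render_comment_safe("eve", "<script>alert(1)</script>")` returns `<p><b>eve</b>: &lt;script&gt;alert(1)&lt;/script&gt;</p>`, which the browser displays as text instead of executing.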

Your 3-Step AI Code Security Action Plan

After working through this problem with dozens of organizations, here’s what actually works:

Step 1: Get Visibility Into Your AI Code

You can’t secure what you can’t see.

Start simple: implement a tagging system that records which code was AI-generated. This isn’t about restricting AI use; it’s about bringing visibility to your blind spots.

How to do it?

- Add comments or tags when developers use AI assistance.

- Implement automated detection for common AI code patterns.

- Track AI usage across different teams and projects.
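One lightweight way to tag is a commit-message trailer. The sketch below assumes a hypothetical team convention, an `AI-Assisted:` trailer line such as `AI-Assisted: copilot`, and measures what share of commits carry it. It works on a list of commit messages; in practice you might collect them with `git log --format=%B%x00` and split the output on the NUL byte.

```python
import re
from collections import Counter

# Hypothetical convention: a trailer line such as "AI-Assisted: copilot".
AI_TRAILER = re.compile(r"^AI-Assisted:\s*(\S+)", re.IGNORECASE | re.MULTILINE)

def ai_usage_report(messages: list[str]) -> tuple[float, Counter]:
    """Return (share of commits tagged as AI-assisted, count per tool)."""
    tools: Counter = Counter()
    tagged = 0
    for msg in messages:
        match = AI_TRAILER.search(msg)
        if match:
            tagged += 1
            tools[match.group(1).lower()] += 1
    share = tagged / len(messages) if messages else 0.0
    return share, tools
```

Usage: `ai_usage_report(["Fix login bug\n\nAI-Assisted: copilot", "Update README", "Add CSV export\n\nAI-Assisted: ChatGPT"])` reports that two of the three commits are tagged, one each from `copilot` and `chatgpt`. Grouping the same report by team or repository gives you the per-project tracking from the last bullet.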

I helped a mid-size company implement this, and they discovered that 60% of their recent commits contained some AI-generated code.

The CISO’s reaction?

“Now I understand why our vulnerability scans have been lighting up.”

Step 2: Deploy AI-Aware Security Scanning

Standard security scanners miss AI-specific vulnerability patterns. You need tools trained to catch the unique ways AI code fails.

Vulnerability detection rates in AI-generated code can run 2-3x higher than in human-written code, but only if your tools know what to look for.

Focus areas:

- Injection vulnerabilities (AI loves string concatenation).

- Authentication bypasses (AI often simplifies auth logic).

- Data exposure issues (AI tends to over-share data between functions).
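As an illustration of what a custom rule for the first focus area could look like, here is a small checker built on Python’s `ast` module. It flags calls to any method named `execute` or `executemany` whose query argument is built with an f-string, `+` concatenation, `%` formatting, or `.format()`. This is a hypothetical, deliberately narrow rule (it misses queries assembled in a separate variable first), not a description of how Bright STAR or any other scanner works.

```python
import ast

def _is_dynamic_string(node: ast.AST) -> bool:
    """True if the node builds a string at runtime instead of using a literal."""
    if isinstance(node, ast.JoinedStr):  # f"... {x} ..."
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True  # "..." + x  or  "..." % x
    return (  # "...".format(x)
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    )

def find_sql_concatenation(source: str, filename: str = "<string>") -> list[tuple[str, int]]:
    """Return (filename, line) for each execute() call with a dynamically built query."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in {"execute", "executemany"}
            and node.args
            and _is_dynamic_string(node.args[0])
        ):
            findings.append((filename, node.lineno))
    return sorted(findings)
```

Usage: `find_sql_concatenation(open(path).read(), path)` returns one `(path, line)` pair per suspicious call; a CI job could fail the build whenever the list is non-empty.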

Bright STAR (Security Testing and Auto-Remediation), our human + AI code scanner, delivers the following results:

- Under 3% false positives.

- 98% faster remediation.

Step 3: Create AI-Specific Review Checkpoints

This isn’t about slowing down development. It’s about adding targeted checkpoints where AI code is most likely to fail.

The approach that works:

- Flag security-critical AI-generated code for human review.

- Create AI-specific security checklists.

- Train your team to recognize common AI vulnerability patterns.
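The first checkpoint can be automated. Here is a minimal sketch, assuming commits are already tagged as AI-assisted (as in Step 1) and that your security-critical code lives under recognizable paths. The patterns in `SECURITY_CRITICAL` are hypothetical placeholders to replace with your own.

```python
from fnmatch import fnmatch

# Hypothetical path patterns for security-critical areas; adapt to your repo.
SECURITY_CRITICAL = ["*auth*", "*login*", "*payment*", "*crypto*", "*session*", "*/api/*"]

def needs_security_review(changed_files: list[str], ai_assisted: bool) -> list[str]:
    """Return the changed files that should get a mandatory human security review."""
    if not ai_assisted:
        return []
    return [
        path
        for path in changed_files
        # fnmatch's "*" also matches "/", so patterns apply anywhere in the path.
        if any(fnmatch(path.lower(), pattern) for pattern in SECURITY_CRITICAL)
    ]
```

Usage: for an AI-assisted pull request touching `src/auth/login.py`, `docs/README.md`, and `src/api/orders.py`, only the auth and API files come back, so reviewers focus where AI code is most likely to fail instead of re-reading everything.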

One company I worked with reduced their AI code vulnerability rate from 45% to 12% just by implementing focused review checkpoints.

Same development speed, dramatically better security posture.

Making Your Action Plan Stick: The Implementation Roadmap

Here’s how to roll this out without causing a developer revolt:

Week 1: The Reality Check

- Audit current AI usage (spoiler: it’s probably higher than you think).

- Establish baseline metrics for vulnerability rates.

- Get buy-in from development leads.

Weeks 2-3: Tool Integration

- Integrate AI-aware scanning into your CI/CD pipeline.

- Set up automated flagging for AI-generated code.

- Train your security team on AI vulnerability patterns.

Week 4+: Process Refinement

- Monitor detection rates and adjust thresholds.

- Gather feedback from development teams.

- Scale successful practices across the organization.

The key is starting with visibility, then building security controls around what you discover.
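For the Week 1 baseline, one simple metric is the share of changes with at least one confirmed security finding, split by whether the change was AI-assisted. Here is a sketch over hypothetical records; the `Change` fields are assumptions about what your tagging and scanner data might look like.

```python
from dataclasses import dataclass

@dataclass
class Change:
    # Hypothetical record: one commit or pull request.
    ai_assisted: bool
    findings: int  # confirmed security findings attributed to this change

def vulnerability_rates(changes: list[Change]) -> dict[str, float]:
    """Share of changes with at least one finding, for AI-assisted vs. other changes."""
    rates = {}
    for label, flag in (("ai_assisted", True), ("other", False)):
        group = [c for c in changes if c.ai_assisted == flag]
        vulnerable = sum(1 for c in group if c.findings > 0)
        rates[label] = vulnerable / len(group) if group else 0.0
    return rates
```

Recomputing the same rates each week from Week 4 onward shows whether the scanning and review checkpoints are actually moving the number.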

The Real Cost of Doing Nothing

I’ve seen what happens when organizations ignore this problem.

Last year, a retail company had a breach that started with an AI-generated API endpoint. The AI had created code that worked perfectly for their use case.

However, it exposed customer data through a parameter manipulation vulnerability.
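Parameter manipulation often means an endpoint trusts an ID from the request (say, a hypothetical `/orders?id=1042`) without checking that the record belongs to the caller. The retailer’s code isn’t shown here, so this is a hypothetical, framework-free sketch: `ORDERS`, `Forbidden`, `get_order_unsafe`, and `get_order` are illustrative names, and an in-memory dict stands in for the database.

```python
from typing import Optional

# Hypothetical in-memory store standing in for a database table.
ORDERS = {
    1041: {"owner": "alice", "address": "1 Main St", "total": 42.0},
    1042: {"owner": "bob", "address": "9 Elm Ave", "total": 17.5},
}

class Forbidden(Exception):
    """Raised when a user asks for a record they do not own."""

def get_order_unsafe(order_id: int, current_user: str) -> Optional[dict]:
    # Vulnerable: any logged-in user can read any order by changing the ID.
    return ORDERS.get(order_id)

def get_order(order_id: int, current_user: str) -> Optional[dict]:
    order = ORDERS.get(order_id)
    if order is None:
        return None
    # Authorization check: the record must belong to the authenticated caller.
    if order["owner"] != current_user:
        raise Forbidden(f"user {current_user!r} may not read order {order_id}")
    return order
```

Both versions "work" for a user reading their own orders, which is why this class of bug survives functional testing. Some teams map `Forbidden` to a 404 rather than a 403 so an attacker can’t even confirm which IDs exist.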

60% of IT leaders describe the impact of AI coding errors as very or extremely significant. But the real cost isn’t just the immediate damage – it’s the long-term technical debt and compliance headaches.


When regulatory frameworks start including AI-specific security requirements (and they will), organizations that haven’t addressed this will face a compliance nightmare.

Your Next Steps: From Awareness to Action

This Cybersecurity Awareness Month, make AI code security your priority.

Start with one simple question: “How much AI-generated code is currently running in our production environment?”

If you don’t know the answer, that’s your first red flag.

This week

- Survey your development teams about AI tool usage.

- Run a scan specifically looking for AI code vulnerability patterns.

- Identify your highest-risk AI-generated code areas.

Next week

- Implement basic tagging for AI-assisted development.

- Add AI-aware rules to your security scanning tools.

- Create review checkpoints for security-critical AI code.

Small steps now prevent major headaches later.

Conclusion: Securing Your AI-Driven Future

AI coding tools aren’t going away. The productivity gains are too significant, and the competitive advantages too clear.

But there’s a right way and a wrong way to do this.

The wrong way is what most organizations are doing right now: embracing AI coding with no security strategy, hoping the problems will solve themselves.

The right way is what I’ve outlined here. Visibility first, targeted scanning second, focused review processes third.

Organizations that get this right will have the best of both worlds – AI-powered productivity with enterprise-grade security.

Those that don’t will keep playing security whack-a-mole, patching AI-generated vulnerabilities in production.

Which would you rather be?


Ready to get visibility into your AI code security risks?

Don’t wait for a vulnerability to surface in production.

Get a quick tour of Bright STAR, our autonomous application security testing and remediation platform, to auto-detect, auto-correct, and auto-protect your applications.