AI-generated code is arguably the holy grail of developer tools this decade. Think back just over two years: every third article seemed to discuss how there weren’t enough engineers to meet demand, and some companies even offered coding training to candidates looking to change careers. The demand for software and hardware innovation kept growing, and companies were asking themselves, with increasing concern, “How do we increase velocity?” Then OpenAI released ChatGPT, and suddenly LLMs and AI-powered tools and platforms were everywhere, AI code generation among them.

In this post, I will walk you through the security implications of AI-generated code and show you a live example of how DAST security testing can be applied to it.

Not all code is created equal

If it looks like a duck and quacks like a duck, is it a duck? Or, in the case of AI-generated code, if it looks like code and runs like code, is it good code? One of the most common misconceptions about generative AI, and LLMs in particular, is that they understand the questions they are asked and reason about their answers the way a person would. In reality, these models predict the most likely output, whether that is an answer to a question or a code completion, based on patterns in their training data. And unlike traditional machine learning models, whose training data is typically carefully gathered, cleaned, and vetted, generative AI models are trained on a large portion of the public web, plus proprietary datasets where available.

For AI-generated code, this means the training data includes huge amounts of publicly accessible code from repositories, documentation, and examples: the good, the bad, and the ones riddled with security vulnerabilities. The bottom line: while LLMs will usually sound correct, knowledgeable, and confident, they are not “thinking” about the best completion for your code. They are predicting a completion based on what they have seen in the wild.
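To make this concrete, here is a minimal, illustrative Python sketch (not taken from any real model’s output) of a pattern that is common in public code and therefore easy for a model to reproduce: building a SQL query with string formatting, which opens the door to SQL injection. The function names and the in-memory `users` table are hypothetical; the safer variant relies on the `?` parameter placeholders supported by Python’s built-in `sqlite3` module.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is interpolated directly into the SQL string.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_user_safe(conn, payload)))  # 0: treated as a literal name
```

Both functions “look like code and run like code” for ordinary input; only a hostile input reveals the difference, which is exactly the kind of flaw that confident-sounding completions can carry over from their training data.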

This isn’t a theoretical problem

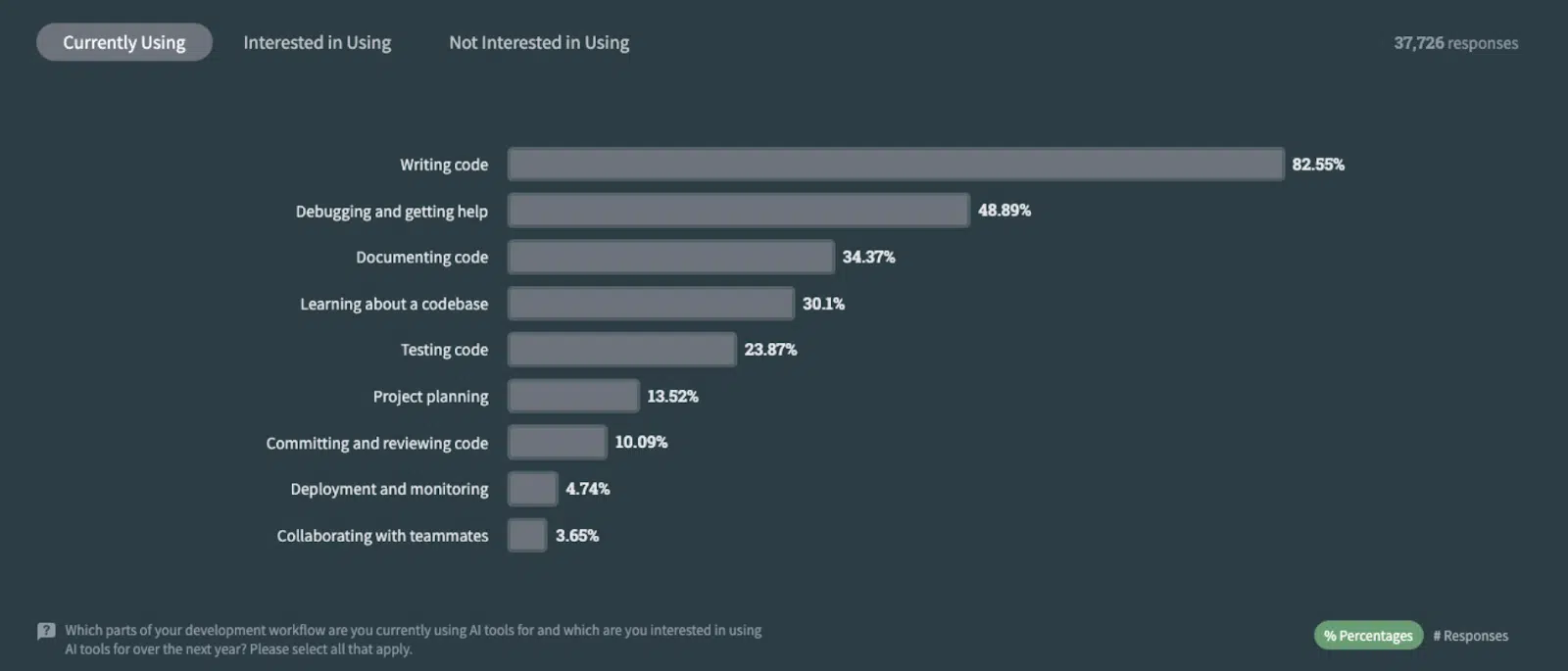

In the Stack Overflow 2023 Developer Survey, over 82% of respondents who currently use AI tools in their development process said they use them to write code. When asked about trust, 42% said they trust the accuracy of the output, while 31% were on the fence.

According to Gartner, 75% of enterprise software engineers are expected to use AI coding assistants by 2028. We can therefore expect not only faster coding and release velocity but also a steady increase in the overall volume of code that organizations produce.

Enter AI-generated security vulnerabilities on steroids

First, let’s differentiate between LLM security issues and LLM-generated software or application security issues.

- LLM security vulnerabilities involve manipulating a deployed LLM, such as an AI-powered chatbot on your website, into granting access to restricted data or operations. Prompt engineering and guardrails are typically used here to test and safeguard the model, but these risks should also be tested at the application level.

- LLM-generated software or application security vulnerabilities include code, web, API, and business logic security vulnerabilities.

With code generation, most vulnerabilities fall into the second category, LLM-generated software or application security vulnerabilities, and according to Gartner, AI-generated code is 4X more prone to security vulnerabilities. As the overall volume of code keeps growing, this compounds existing problems: security testing that happens too late in the SDLC (hence the need for more shift-left testing), developers working in different tools than AppSec teams, and developers who don’t always have the security knowledge needed to fix the vulnerabilities they find.

This is where Bright’s security unit testing extension comes into play.

Bright for Copilot

Bright’s LLM-powered security unit testing extension for GitHub Copilot helps organizations accelerate code generation without introducing security vulnerabilities. It puts DAST in the hands of developers at the IDE and unit testing levels, letting them leverage security testing from the get-go without having to become security gurus.
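Bright’s extension itself is not shown here. As a generic, hypothetical illustration of what a security unit test can look like, the Python sketch below uses the standard `unittest` module to feed a few classic cross-site scripting (XSS) payloads into a small HTML-rendering function and asserts that none of them come back as executable markup. `render_greeting`, the test class, and the payload list are assumptions made for illustration; a real DAST tool exercises a running application over HTTP rather than calling a function directly.

```python
import html
import unittest


def render_greeting(name: str) -> str:
    """Hypothetical view helper: escapes user input before embedding it in HTML."""
    return f"<p>Hello, {html.escape(name)}!</p>"


# A few classic XSS probes; real scanners use far larger, context-aware payload sets.
XSS_PAYLOADS = [
    "<script>alert(1)</script>",
    '"><img src=x onerror=alert(1)>',
    "<svg onload=alert(1)>",
]


class GreetingSecurityTest(unittest.TestCase):
    def test_payloads_are_escaped(self) -> None:
        for payload in XSS_PAYLOADS:
            with self.subTest(payload=payload):
                body = render_greeting(payload)
                # No raw tag from the payload should survive into the output.
                self.assertNotIn("<script", body)
                self.assertNotIn("<img", body)
                self.assertNotIn("<svg", body)
                # The escaped form of the payload should appear instead.
                self.assertIn(html.escape(payload), body)
```

Saved as, say, `test_greeting.py`, it runs with `python -m unittest test_greeting`. If an AI assistant later “simplifies” `render_greeting` by dropping the `html.escape` call, this test fails immediately, which is the point of running security checks at the unit level rather than waiting for a late-stage scan.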

Emerging security threats in AI-generated code

AI-generated code is not going anywhere. On the contrary, developers are already using it en masse on individual plans, if not in organizational settings. If history teaches us anything, it is that when developers adopt engineering tools from the grassroots, it is only a matter of time before those tools make it into the enterprise. The benefits for both are clear: greater productivity and velocity.

That said, AI-generated code is a brand-new attack surface that modern enterprises need to evaluate and safeguard. That is why Bright is developing extensions and capabilities designed to empower developers to perform security testing throughout the SDLC and across new, evolving attack surfaces.